Export last hour CSV object log file to external or local FTP server from LogicMachine #

Task #

Once an hour, create a CSV file with all object logs and send

to an external FTP server with IP 192.168.1.11, login ftplogin,

password ftppassword. In case you want to download the file

manually from LM local FTP server, it is available in

directory /home/ftp

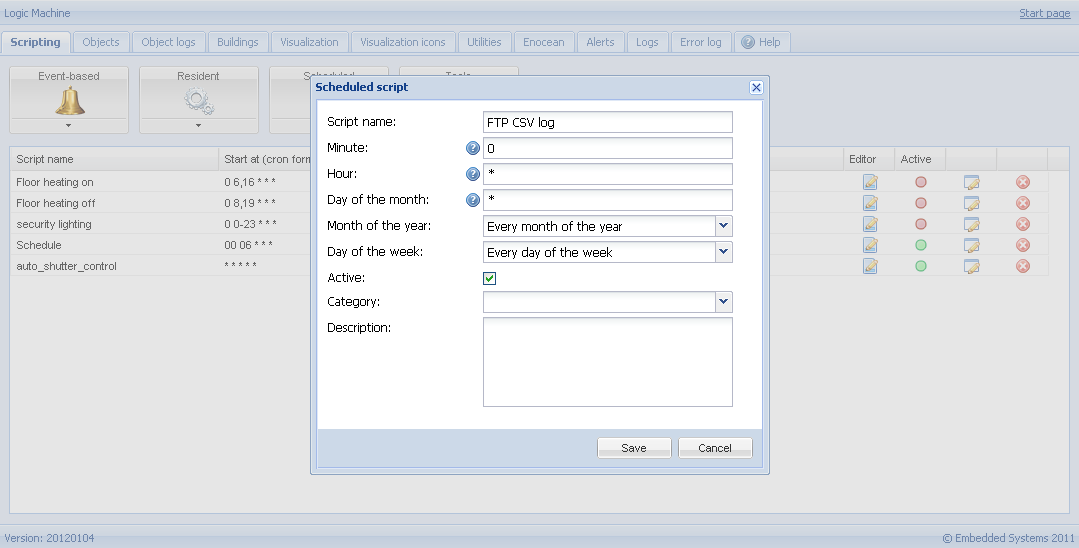

Scheduled script #

In Scripting -> Scheduled add the script which will run once an hour and add following code into the Script editor.

require('socket.ftp')

-- ftp file

url = 'ftp://ftplogin:ftppassword@192.168.1.11/%s.csv'

date = os.date('%Y-%m-%d_%H-%M')

ftpfile = string.format(url, date)

-- get past hour data (3600 seconds)

logtime = os.time() - 60 * 60

-- csv buffer

buffer = {

'"date","type","destination","name","datatype",' ..

'"value","source","sender","login/meta"'

}

-- get object logs

query = [[

SELECT ol.*, o.name, o.datatype

FROM objectlog ol

LEFT JOIN objects o ON ol.address=o.id

WHERE logtime >= ?

ORDER BY id DESC

]]

function fmtdpt(dpt)

if dpt >= 1000 then

dpt = string.format('%0.3f', dpt / 1000)

end

return dpt

end

for _, row in ipairs(db:getall(query, logtime)) do

id = tonumber(row.address) or 0

ia = tonumber(row.src) or 0

logdate = os.date('%Y.%m.%d %H:%M:%S', math.floor(row.logtime))

etype = row.eventtype

textdpt = nil

if (etype == 'write' or etype == 'response') and row.datatype then

textdpt = fmtdpt(row.datatype)

value = grp.decodevalue(row.datahex, row.datatype)

else

value = ''

end

buffer[ #buffer + 1 ] = string.format('%q,%q,%q,%q,%q,%q,%q,%q,%q',

logdate,

etype,

buslib.decodega(id),

row.name or '',

tostring(textdpt or ''),

tostring(value),

ia > 0 and buslib.decodeia(ia) or 'local',

row.sender or '',

row.meta or ''

)

end

-- upload to ftp only when there's data in buffer

if #buffer > 1 then

res, err = socket.ftp.put(ftpfile, table.concat(buffer, '\r\n'))

end

-- error while uploading

if err then

alert('FTP upload error: %s', tostring(err))

end

Write to an existing FTP file #

Replace these lines:

if #buffer > 1 then

res, err = socket.ftp.put(ftpfile, table.concat(buffer, '\r\n'))

end

With these:

if #buffer > 1 then

data = table.concat(buffer, '\r\n')

ltn12 = require('ltn12')

res, err = socket.ftp.put({

host = '192.168.1.40',

user = 'ftp',

password = 'ftp',

command = 'appe',

argument = 'log.csv',

source = ltn12.source.string(data)

})

end

Local FTP CSV storage #

Modify the original script as follows.

Step 1 #

Replace this line:

url = 'ftp://ftplogin:ftppassword@192.168.1.11/%s.csv'

With this:

url = '/home/ftp/%s.csv'

Step 2 #

Replace these lines:

-- upload to ftp only when there's data in buffer

if #buffer > 1 then

result, err = socket.ftp.put(ftpfile, table.concat(buffer, '\r\n'))

end

-- error while uploading

if err then

alert('FTP upload error: %s', tostring(err))

end

With these:

if #buffer > 1 then

data = table.concat(buffer, '\r\n')

io.writefile(ftpfile, data)

end

Step 3 #

Create a scheduled script that runs once a day to remove old logs (60 is a number of days to keep old logs):

function cleanup(dir, days)

local now, files, mtime, delta

now = os.time()

files = io.ls(dir) or {}

for _, file in ipairs(files) do

file = dir .. '/' .. file

_, mtime = io.stat(file)

if mtime then

delta = (now - mtime) / 86400

if delta > days then

os.remove(file)

end

end

end

end

cleanup('/home/ftp', 60)